OneStor Datastore

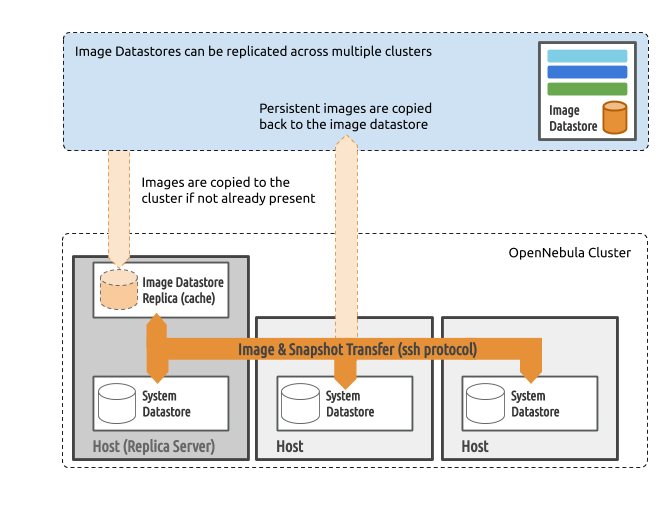

Like the Local Storage Datastore, this configuration uses the local storage area of each Host to run VMs. On top of that, it provides:

- Caching features to reduce image transfers and speed up boot times.
- Automatic recovery mechanisms for qcow2 images and the KVM hypervisor.

Additionally, you'll need a storage area for the VM disk image repository. Disk images are transferred from the repository to the Hosts and cache areas using the SSH protocol.

Front-end Setup

The Front-end needs to prepare the storage area for:

- Image Datastores, to store the image repository.
- System Datastores, to hold temporary disks and files for stopped and undeployed VMs.

Simply make sure that there is enough space under /var/lib/one/datastores to store Images and the disks of the stopped and undeployed Virtual Machines. Note that /var/lib/one/datastores can be mounted from any NAS/SAN server in your network.

Host Setup

Just make sure that there is enough space under /var/lib/one/datastores to store the disks of running VMs on that Host.
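
The space requirement above can be checked with a small script. This is only a sketch: the 50 GiB threshold and the helper names `free_kib` and `has_space` are assumptions chosen for illustration, not part of OpenNebula.

```shell
#!/bin/sh
# Print the free space, in KiB, of the filesystem holding the given directory.
free_kib() {
    # df -P gives stable POSIX output; -k reports 1024-byte blocks.
    df -Pk "$1" 2>/dev/null | awk 'NR == 2 { print $4 }'
}

# Succeed if at least min_kib KiB are free under the directory.
has_space() {
    dir=$1
    min_kib=$2
    avail=$(free_kib "$dir")
    [ -n "$avail" ] && [ "$avail" -ge "$min_kib" ]
}

# Hypothetical threshold: 50 GiB. Adjust to your image and disk sizes.
DS_DIR=${DS_DIR:-/var/lib/one/datastores}
if has_space "$DS_DIR" $((50 * 1024 * 1024)); then
    echo "OK: at least 50 GiB free under $DS_DIR"
else
    echo "WARNING: less than 50 GiB free (or path missing) under $DS_DIR"
fi
```

The same check applies to the Front-end and to each Host, since both keep their datastores under the same path.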

Warning

Make sure all the Hosts, including the Front-end, can SSH to any other host (including themselves), otherwise migrations will not work.

One additional Host per cluster needs to be designated as REPLICA_HOST and it will hold the disk images cache under /var/lib/one/datastores. It is recommended to add extra disk space in this Host.

OpenNebula Configuration

Once the storage on the Nodes and the Front-end is set up, the OpenNebula configuration consists of creating an Image Datastore and the System Datastores.

Create System Datastore

You need to create a System Datastore for each cluster in your cloud, using the following (template) parameters:

| Attribute | Description |
|---|---|
| NAME | Name of datastore |
| TYPE | SYSTEM_DS |
| TM_MAD | ssh |
| REPLICA_HOST | Hostname of the designated cache Host |

For example, consider a cloud with two clusters; the datastore configuration could be as follows:

    # onedatastore list -l ID,NAME,TM,CLUSTERS
     ID NAME              TM   CLUSTERS
    101 system_replica_2  ssh  101
    100 system_replica_1  ssh  100
      1 default           ssh  0,100,101
      0 system            ssh  0

Note that in this case a single Image Datastore (1) is shared across clusters 0, 100 and 101. Each cluster has its own System Datastore (100 and 101) with replication enabled, while System Datastore 0 does not use replication.

Replication is enabled by the presence of the REPLICA_HOST key, with the name of one of the Hosts belonging to the cluster. Here’s an example of the replica System Datastore settings:

    # onedatastore show 100
    ...
    DISK_TYPE="FILE"
    REPLICA_HOST="cluster100-host1"
    TM_MAD="ssh"
    TYPE="SYSTEM_DS"
    ...
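
A template that would produce settings like these could be written as follows. This is a sketch: the file name `system_replica.conf` is an assumption, and the datastore name and hostname are simply reused from the example.

```shell
#!/bin/sh
# Write a System Datastore template with replication enabled.
# The name and hostname come from the example; adjust them to your cluster.
TEMPLATE=${TEMPLATE:-system_replica.conf}

cat > "$TEMPLATE" <<'EOF'
NAME         = system_replica_1
TYPE         = SYSTEM_DS
TM_MAD       = ssh
REPLICA_HOST = cluster100-host1
EOF

# Register the datastore only if the OpenNebula CLI is available
# (run as oneadmin on the Front-end).
if command -v onedatastore >/dev/null 2>&1; then
    onedatastore create "$TEMPLATE"
else
    echo "onedatastore not found; template written to $TEMPLATE"
fi
```

After creation, the datastore still has to be associated with its cluster, for instance with `onecluster adddatastore <cluster> <datastore>`.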

Note

You need to balance your storage transfer patterns (number of VMs created, disk image sizes…) with the number of Hosts per cluster to make effective use of the caching mechanism.

Create Image Datastore

To create a new Image Datastore, you need to set the following (template) parameters:

| Attribute | Description |
|---|---|
| NAME | Name of datastore |
| TYPE | IMAGE_DS |
| DS_MAD | fs |
| TM_MAD | ssh |

For example, the following illustrates the creation of a Local Datastore:

    $ cat ds.conf
    NAME = local_images
    DS_MAD = fs
    TM_MAD = ssh

    $ onedatastore create ds.conf
    ID: 100

Also note that there are additional attributes that can be set. Check the datastore template attributes.

Additional Configuration

- QCOW2_OPTIONS: Custom options for the qemu-img clone action. Images are created through the qemu-img command using the original image as a backing file. Custom options can be passed to the qemu-img clone action through the QCOW2_OPTIONS variable in /etc/one/tmrc.
- DD_BLOCK_SIZE: Block size for dd operations (default: 64kB). It can be set in /var/lib/one/remotes/etc/datastore/fs/fs.conf.
- SUPPORTED_FS: Comma-separated list of every filesystem supported for creating formatted datablocks. It can be set in /var/lib/one/remotes/etc/datastore/datastore.conf.
- FS_OPTS_<FS>: Options for creating the filesystem for formatted datablocks. They can be set in /var/lib/one/remotes/etc/datastore/datastore.conf for each filesystem type.
- SPARSE: If set to NO, the images created in the Datastore won't be sparse.
- QCOW2_STANDALONE: If set to YES, a standalone qcow2 disk is created during the CLONE operation (default: QCOW2_STANDALONE="NO"). Unlike the previous options, this one is defined in the Image Datastore template and inherited by the disks.

Warning

Before adding a new filesystem to the SUPPORTED_FS list, make sure that the corresponding mkfs.<fs_name> command is available on the Front-end and the hypervisor Hosts. If a user requests an unsupported filesystem, the default one is used instead.
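
The availability of the mkfs helpers can be checked on each machine with a short script. This is a sketch: the candidate list and the function name `missing_mkfs` are assumptions for illustration.

```shell
#!/bin/sh
# Print every filesystem in a comma-separated list that has no
# mkfs.<fs> helper in the PATH of the current machine.
missing_mkfs() {
    # Split the list on commas and test each helper with command -v.
    for fs in $(printf '%s' "$1" | tr ',' ' '); do
        command -v "mkfs.$fs" >/dev/null 2>&1 || echo "$fs"
    done
}

# Hypothetical candidate list; use the value you plan to set in SUPPORTED_FS.
SUPPORTED_FS=${SUPPORTED_FS:-"ext4,ext3,xfs"}
missing=$(missing_mkfs "$SUPPORTED_FS")
if [ -n "$missing" ]; then
    echo "Missing mkfs helpers for:" $missing
else
    echo "All mkfs helpers present"
fi
```

The mkfs tools are often installed under /sbin or /usr/sbin, so the PATH of the user running the check may need to include those directories.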

REPLICA_HOST vs. REPLICA_STORAGE_IP

OneStor was originally designed for Edge clusters, where the Hosts are typically reached on their public IPs. However, for copying images between Hosts (replica -> hypervisor), it can be useful to use a local IP address from the private network. For that case, you can define REPLICA_STORAGE_IP in the Host template of the replica Host; it will be used instead of REPLICA_HOST for those transfers.
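
Setting the attribute could look like the following sketch. The IP address and the file name are hypothetical placeholders, and the hostname is reused from the earlier example.

```shell
#!/bin/sh
# Append REPLICA_STORAGE_IP to the template of the replica Host.
# The IP address and file name below are hypothetical placeholders.
REPLICA_HOST=${REPLICA_HOST:-cluster100-host1}
ATTR_FILE=${ATTR_FILE:-replica_storage_ip.conf}

cat > "$ATTR_FILE" <<'EOF'
REPLICA_STORAGE_IP = "192.168.100.10"
EOF

if command -v onehost >/dev/null 2>&1; then
    # --append adds the attribute without replacing the rest of the template.
    onehost update "$REPLICA_HOST" "$ATTR_FILE" --append
else
    echo "onehost not found; attribute written to $ATTR_FILE"
fi
```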

Additionally, the following attributes can be tuned in the configuration file /var/lib/one/remotes/etc/tm/ssh/sshrc:

| Attribute | Description |
|---|---|
| | Timeout to expire lock operations. It should be adjusted to the maximum image transfer time between Image Datastores and clusters. |
| | Default directory to store the recovery snapshots. These snapshots are used to recover VMs in case of Host failure in a cluster. |
| | SSH options used when copying from the replica to the hypervisor. Weaker (faster) ciphers are preferred on secure networks. |
| | SSH options used when copying from the Front-end to the replica. Stronger ciphers are preferred on public networks. |
| | Maximum size of cached images on the replica, in MB. |
| | Maximum usage of the replica filesystem, in %. |

Recovery Snapshots

Important

Recovery Snapshots are only available for KVM and the qcow2 image format.

Because recovery snapshots are created by the monitoring client and not by a driver action, they require a password-less SSH connection from the hypervisors to the REPLICA_HOST. This means that the private SSH key of the oneadmin user also needs to be distributed to the nodes.

Additionally, in replica mode you can enable recovery snapshots for particular VM disks. You can do it by adding the option RECOVERY_SNAPSHOT_FREQ to DISK in the VM template.

    $ onetemplate show 100
    ...
    DISK=[
      IMAGE="image-name",
      RECOVERY_SNAPSHOT_FREQ="3600" ]

With this setting, the disk is snapshotted every hour (3600 seconds) and a copy of the snapshot is prepared on the replica. Should the Host where the VM is running later fail, the VM can be recovered, either manually or through the fault tolerance hooks:

    $ onevm recover --recreate [VMID]

During the recovery the VM is recreated from the recovery snapshot.

Datastore Internals

Images are saved into the corresponding Datastore directory (/var/lib/one/datastores/<DATASTORE ID>). Also, for each running Virtual Machine there is a directory (named after the VM ID) in the corresponding System Datastore. These directories contain the VM disks and additional files, e.g. checkpoint or snapshots.

For example, a system with an Image Datastore (1) with three images and three Virtual Machines (VM 0 and 2 running, and VM 7 stopped) running from System Datastore 0 would present the following layout:

    /var/lib/one/datastores
    |-- 0/
    |   |-- 0/
    |   |   |-- disk.0
    |   |   `-- disk.1
    |   |-- 2/
    |   |   `-- disk.0
    |   `-- 7/
    |       |-- checkpoint
    |       `-- disk.0
    `-- 1
        |-- 05a38ae85311b9dbb4eb15a2010f11ce
        |-- 2bbec245b382fd833be35b0b0683ed09
        `-- d0e0df1fb8cfa88311ea54dfbcfc4b0c
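
To inspect this layout on a live system, the VM directories of a System Datastore can be walked with a few lines of shell. This is a sketch: the function name `list_vm_disks` is an assumption, and it relies only on the directory structure shown in the example.

```shell
#!/bin/sh
# List the disks found in each VM directory of a System Datastore.
# Arguments: datastores base path, System Datastore ID.
list_vm_disks() {
    base=$1
    ds_id=$2
    for vm_dir in "$base/$ds_id"/*/; do
        # Skip the unexpanded pattern when the datastore has no VM directories.
        [ -d "$vm_dir" ] || continue
        vm_id=$(basename "$vm_dir")
        for disk in "$vm_dir"disk.*; do
            [ -e "$disk" ] || continue
            echo "VM $vm_id: $(basename "$disk")"
        done
    done
}

# System Datastore 0 under the default datastores path.
list_vm_disks "${DATASTORE_LOCATION:-/var/lib/one/datastores}" 0
```

For the example layout, this would print one line per disk of VMs 0, 2 and 7, and skip the checkpoint file.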

Note

The canonical path for /var/lib/one/datastores can be changed in /etc/one/oned.conf with the DATASTORE_LOCATION configuration attribute.

In this mode, the images are cached in each cluster and so are available close to the hypervisors. This effectively reduces the bandwidth pressure on the Image Datastore servers and shortens deployment times. This is especially important for edge-like deployments, where copying images from the Front-end to the hypervisor for each VM could be slow.

This replication mode implements a three-level storage hierarchy: cloud (image datastore), cluster (replica cache) and hypervisor (system datastore). Note that replication occurs at cluster level and a System Datastore needs to be configured for each cluster.